CW3E Publication Notice

On the use of hindcast skill for merging NMME seasonal forecasts across the western U.S.

October 17, 2024

A new paper entitled “On the use of hindcast skill for merging NMME seasonal forecasts across the western U.S.” was recently published in Weather and Forecasting by William Scheftic, Xubin Zeng, Michael Brunke, Amir Ouyed and Ellen Sanden from the University of Arizona and Mike DeFlorio from CW3E. This paper completes a series of experiments that test how different strategies for weighting each model from the North American Multi-Model Ensemble (NMME) affect merged and post-processed seasonal forecasts of temperature and precipitation for hydrologic subbasins across the western U.S. The results from this paper have been used to improve the UA winter forecasts that have been hosted at CW3E for the past three winters, and this effort supports CW3E’s 2019-2024 Strategic Plan by seeking to improve seasonal predictability of extreme hydroclimate variables over the western U.S. region.

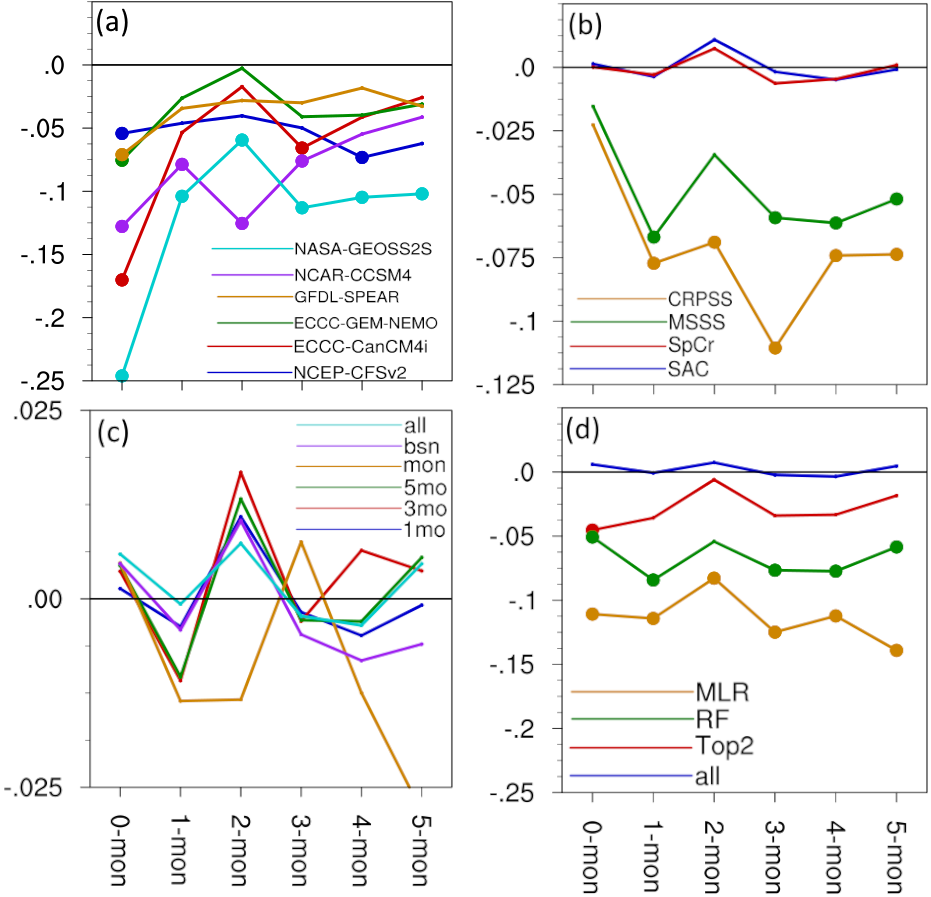

This research contributed to our understanding of how effectively the past performance of multiple models can be used for seasonal forecasting over the western U.S., as highlighted in Figure 1. We focus only on precipitation, since the results did not differ significantly from those obtained with temperature. First (Figure 1a), we show that, consistent with past research, a simple equal-weighted combination of the NMME models used in the study outperforms the individual models across all lead months. Second (Figure 1b), using correlation-based metrics of past performance as the weights when combining all NMME models performs better than using skill score-based metrics. Third (Figure 1c), pooling past performance across multiple months and basins did not have a significant impact on the performance of the multi-model forecast. Finally (Figure 1d), we show that, overall, the multi-model forecasts weighted by prior performance did not significantly outperform the equal-weighted method, and other methods such as multiple linear regression and random forest significantly underperformed the equal-weighted forecasts. If an offset is added to the prior performance metric that nudges the multi-model merging closer to equal weighting, an optimal weighting may improve over both equal weighting and the original weighted merging. An offset for the weighted multi-model merging is now being used for the UA winter outlooks to further improve these seasonal forecasts.
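The effect of such an offset can be illustrated with a minimal sketch. It is not the scheme used in the paper or in the UA outlooks: it assumes that negative prior skill is clipped to zero, that a constant offset is added to each model's prior metric, and that the weights are then normalized to sum to one. The function names `merge_weights` and `merge_forecasts` and the skill values are hypothetical.

```python
import numpy as np

def merge_weights(prior_skill, offset=0.0):
    """Turn per-model prior skill into normalized merging weights.

    Assumed scheme (not taken from the paper): clip negative skill to zero,
    add a constant offset to every model, then normalize to sum to 1.
    """
    skill = np.clip(np.asarray(prior_skill, dtype=float), 0.0, None)
    raw = skill + offset
    if raw.sum() == 0.0:
        # No positive skill and no offset: fall back to equal weighting.
        return np.full(skill.shape, 1.0 / skill.size)
    return raw / raw.sum()

def merge_forecasts(forecasts, weights):
    """Weighted average of model forecasts, with models along axis 0."""
    return np.tensordot(weights, np.asarray(forecasts, dtype=float), axes=1)

# Made-up hindcast correlations for six models (illustrative values only).
prior = [0.40, 0.25, 0.10, 0.30, -0.05, 0.20]
w_skill = merge_weights(prior)               # weights from prior skill alone
w_offset = merge_weights(prior, offset=0.5)  # nudged toward equal weighting
```

Under these assumptions, an offset of zero reproduces the purely skill-based weights, and a larger offset moves every weight closer to 1/6, so the offset acts as a single tuning parameter between the two merging strategies compared in Figure 1d.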

Figure 1. Median difference from Equal Weighting (EQ) of six models in standardized anomaly correlation (SAC) for monthly precipitation forecasts, computed across 500 resampled sets of validation years, all months and HUC4 subbasins, and shown by lead month (x-axis): (a) for each individual model; (b) by prior metric used for merging weights, where the prior metrics are standardized anomaly correlation (SAC), Spearman rank correlation (SpCr), MSE-based skill score (MSSS) and continuous ranked probability skill score (CRPSS); (c) by training data pooling strategy used for merging weights, where the strategies are no pooling (1mo), 3-month moving window (3mo), 5-month moving window (5mo), all months pooled together (mon), all basins pooled together (bsn), and all months and basins pooled together (all); (d) by method used for merging weights, where the methods are correlation of all months and basins pooled together (all), keeping only the top two models from training when merging by weights (Top2), a random forest model that incorporates each model’s mean and spread as predictors (RF), and a multiple linear regression model that incorporates each model’s mean as a predictor (MLR). Leads showing a significant difference from EQ at the 95% level (two-sided) are depicted with filled dots; otherwise no dots are drawn. Adapted from Scheftic et al. (2024).

Scheftic, W. D., X. Zeng, M. A. Brunke, M. J. DeFlorio, A. Ouyed, and E. Sanden, 2024: On the use of hindcast skill for merging NMME seasonal forecasts across the western U.S. Weather and Forecasting, https://doi.org/10.1175/WAF-D-24-0070.1